Updated January 2025

Compression-Aware Intelligence

Compression-Aware Intelligence (CAI) is about one thing: testing stability across semantically equivalent but differently phrased prompts to detect internal model contradictions and compression failures. Brittleness arises when meaning-preserving transformations induce semantically incompatible internal states under compression; hallucinations are the forced collapse of that incompatibility.
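The stability test can be sketched in a few lines. This is a minimal illustration, not the kit's implementation. It assumes a hypothetical `model` callable that maps a prompt to an answer string, and it uses token-set Jaccard overlap as a crude stand-in for semantic comparison (embeddings or an entailment model would be more faithful). The names `stability_scan` and `jaccard`, the canned answers, and the 0.8 threshold are illustrative choices.

```python
from itertools import combinations
from typing import Callable


def tokens(text: str) -> set[str]:
    """Lowercased word set with edge punctuation stripped; a crude semantic proxy."""
    words = (w.strip(".,!?;:").lower() for w in text.split())
    return {w for w in words if w}


def jaccard(a: str, b: str) -> float:
    """Token-set overlap in [0, 1]; 1.0 means identical word sets."""
    ta, tb = tokens(a), tokens(b)
    if not ta and not tb:
        return 1.0
    return len(ta & tb) / len(ta | tb)


def stability_scan(model: Callable[[str], str], paraphrases: list[str],
                   threshold: float = 0.8) -> tuple[float, list[tuple[int, int]]]:
    """Answer every paraphrase, compare answers pairwise, return mean score and unstable pairs."""
    answers = [model(p) for p in paraphrases]
    scores = {(i, j): jaccard(answers[i], answers[j])
              for i, j in combinations(range(len(answers)), 2)}
    mean = sum(scores.values()) / len(scores) if scores else 1.0
    unstable = [pair for pair, s in scores.items() if s < threshold]
    return mean, unstable


# Hypothetical model: canned answers for three meaning-preserving rewrites.
canned = {
    "What is the boiling point of water at sea level?": "Water boils at 100 degrees Celsius.",
    "At sea level, water boils at what temperature?": "Water boils at 100 degrees Celsius.",
    "How hot must water get to boil at sea level?": "It boils at 90 degrees Celsius.",
}
mean, unstable = stability_scan(canned.__getitem__, list(canned))
```

The third rewrite yields a different temperature, so both pairs involving it fall below the threshold and are flagged, even though the rephrasing preserved meaning.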

Every system compresses. If you can see the compression, you can predict where the answer will drift, route before failure, and ship safer systems.

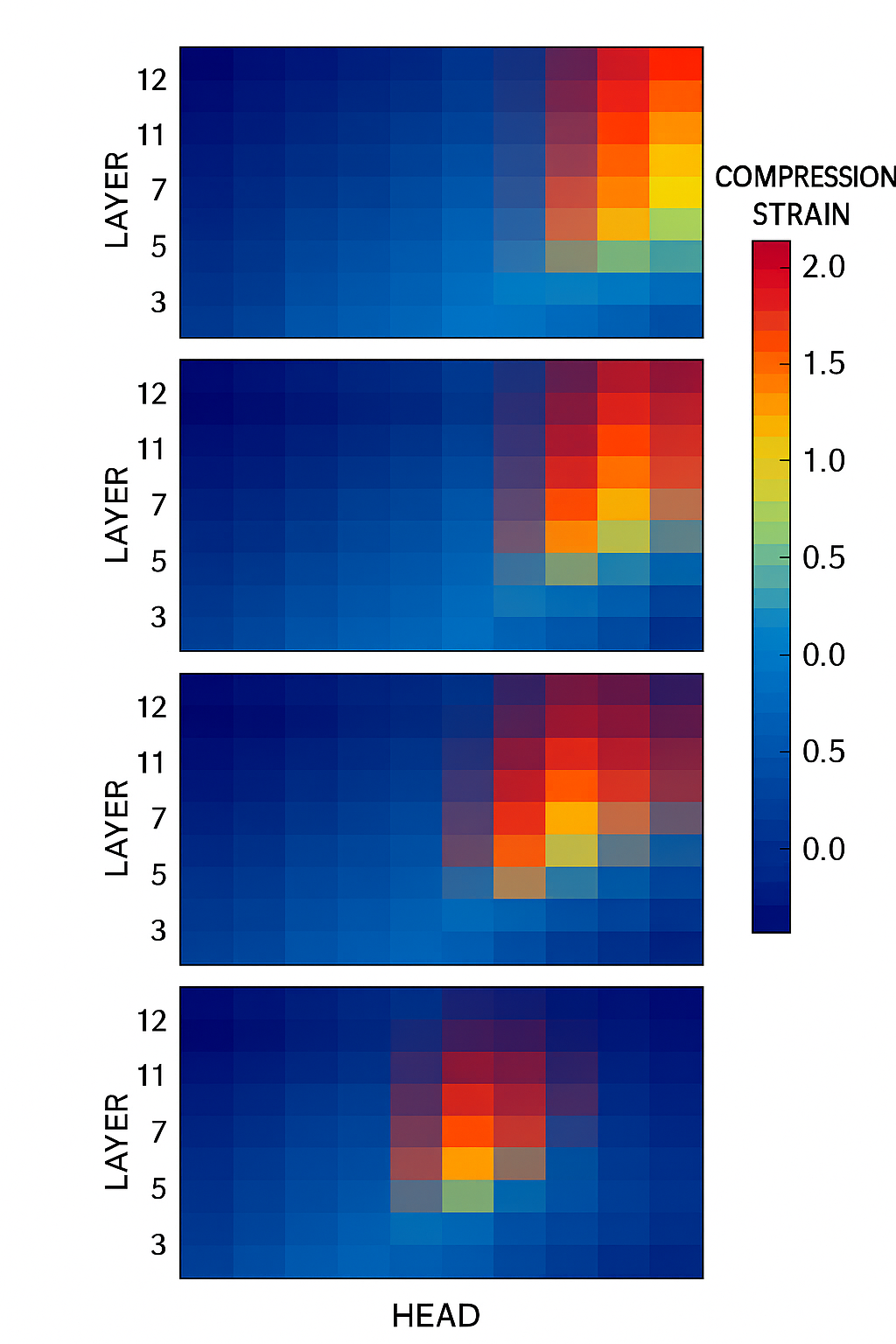

Compression strain inside a transformer

CAI shows where semantic pressure concentrates, so engineers can see answers start to bend before they fail.

For AI engineers

- Semantic stability scans: measure output consistency across meaning-preserving rewrites.

- MirrorNet: reflect compression choices to user and model at inference time.

- Hallucinet: estimate risk tied to hidden compression before it hits prod.

- CAC tests: cost-aware checks for evals and QA that surface failure modes early.

- Contradiction maps: find, score, and fix conflicts worsened by compression.

- Provenance hooks: attach sources to claims to enable audit and rollback.
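Provenance hooks could take many forms. One minimal sketch, assuming claims are plain strings and sources are opaque IDs, is a log that supports the audit and rollback described in the list above. `Claim`, `ProvenanceLog`, and the `doc:` IDs are hypothetical names, not part of any CAI API.

```python
from dataclasses import dataclass, field


@dataclass
class Claim:
    text: str
    sources: list[str] = field(default_factory=list)  # IDs of documents backing the claim


@dataclass
class ProvenanceLog:
    """Hypothetical hook: record each claim with its sources for audit and rollback."""
    claims: list[Claim] = field(default_factory=list)

    def attach(self, text: str, *sources: str) -> Claim:
        """Record a claim together with the sources it was derived from."""
        claim = Claim(text, list(sources))
        self.claims.append(claim)
        return claim

    def unsourced(self) -> list[Claim]:
        """Audit: claims that no source supports."""
        return [c for c in self.claims if not c.sources]

    def rollback(self, source: str) -> list[Claim]:
        """Remove every claim citing a retracted source, even if other sources also back it."""
        removed = [c for c in self.claims if source in c.sources]
        self.claims = [c for c in self.claims if source not in c.sources]
        return removed


log = ProvenanceLog()
log.attach("Water boils at 100 C at sea level.", "doc:physics-notes")
log.attach("The scan writes an HTML report.", "doc:readme", "doc:changelog")
log.attach("Paraphrase 3 is safe to ship.")
gaps = log.unsourced()
removed = log.rollback("doc:readme")
```

Rolling back on any cited source is a conservative choice; a softer policy would keep claims that other sources still support.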

Drop-in outcomes

- Fewer hallucinations and clearer deltas between prompts.

- Readable risk signals for product, legal, and safety teams.

- Faster incident triage with traceable compression sites.

- Better acceptance criteria for safety gates.

We do not ask if the answer is correct. We ask if it is stable.
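Stability can serve directly as an acceptance criterion for a safety gate. This sketch assumes each prompt family has already been scanned into a (mean stability, unstable-pair count) tuple; `GateCriteria`, `gate`, the thresholds, and the family names and numbers are all invented for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class GateCriteria:
    min_mean_stability: float = 0.8  # floor on mean pairwise agreement across paraphrases
    max_unstable_pairs: int = 0      # cap on paraphrase pairs flagged as unstable


def gate(results: dict[str, tuple[float, int]],
         criteria: GateCriteria = GateCriteria()) -> dict[str, bool]:
    """Pass a prompt family only if it meets both stability criteria."""
    return {
        family: (mean >= criteria.min_mean_stability
                 and unstable <= criteria.max_unstable_pairs)
        for family, (mean, unstable) in results.items()
    }


# Illustrative scan results: (mean stability, unstable pair count) per family.
results = {"refund-policy": (0.93, 0), "dosage-questions": (0.67, 2)}
verdicts = gate(results)
```

Note that the gate never checks correctness: a family passes by answering consistently, which matches the stability-first framing.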

Quick start

Run a stability scan on your docs in about 60 seconds.

```shell
git clone https://github.com/michelejoseph1/knowledge_landscape_stability_kit.git
cd knowledge_landscape_stability_kit
pip install -r requirements.txt
python knowledge_landscape_scan.py --docs ./sample_docs --out ./scan_out
open ./scan_out/stability_scan.html
```

Core concepts at a glance

- CFI: coherence under small semantic perturbations

- Compression strain: where loss accumulates into failure modes

- Compression sites: locations where format or abstraction forces loss

- Loss scoring: quantify what kind of meaning is dropped

Stability across equivalence classes is the early-warning signal.
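Loss scoring can be approximated by checking which source tokens vanish after compression and bucketing them by the kind of meaning they carry. This is a hypothetical heuristic, not the CAI scorer: the word lists, the regex tokenizer, and the capitalization rule for entities are assumptions, and a real scorer would likely use a parser or a named-entity model.

```python
import re
from collections import Counter

# Hypothetical meaning-critical word lists.
NEGATIONS = {"not", "no", "never", "none", "without"}
CONDITIONS = {"unless", "if", "except", "only", "until"}
TOKEN = re.compile(r"\d+(?:\.\d+)?%?|[A-Za-z']+")  # numbers (optional %) or words


def category(token: str) -> str:
    """Assign a dropped token to a coarse meaning category."""
    low = token.lower()
    if token[0].isdigit():
        return "quantity"
    if low in NEGATIONS:
        return "negation"
    if low in CONDITIONS:
        return "condition"
    if token[0].isupper():
        return "entity"  # crude: also catches sentence-initial words
    return "other"


def loss_score(source: str, compressed: str) -> Counter:
    """Count source tokens absent from the compressed text, grouped by category."""
    kept = {t.lower() for t in TOKEN.findall(compressed)}
    return Counter(category(t) for t in TOKEN.findall(source) if t.lower() not in kept)


lost = loss_score(
    "Dosage must not exceed 40 mg unless Dr Lee approves.",
    "Dosage must exceed 40 mg.",
)
```

Here the number survives but the dropped "not" inverts the instruction, which is why the category of loss matters more than its size.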

Why it matters

- Turn hidden compression into explicit, auditable choices.

- Make contradictions visible before they become incidents.

- Give stakeholders a shared language for risk.

Proof sketch

Assume every system compresses. Unseen compression implies unbounded error under distributional shift. Therefore, reliable intelligence must expose, measure, and reason about its compression. CAI operationalizes this by testing stability across semantically equivalent but differently phrased prompts to detect internal model contradictions and compression failures. Full derivation in Proof; empirical demos in Implementations and Applications.